MAP ESTIMATES

Priors, Bayes' rule and MAP

- Last class we maximised the likelihood $ L(x_1, x_2, \dots, x_n \mid \theta) $ with respect to $ \theta $ to obtain a point estimate of $ \theta $, called the maximum likelihood estimate (MLE).

- Let's use the shorthand $ P(D|\theta) $ for the likelihood term, where $ D = \{x_1, x_2, \dots, x_n\} $ denotes the observed data.

- Now suppose we hold some beliefs about the value of $ \theta $ before seeing any data. For example, when tossing a coin, you might believe that the coin is extremely biased towards either heads or tails. If you represent these beliefs as a probability distribution over $ \theta $, you get the prior distribution on $ \theta $. Let's call it $ P(\theta) $.

By Bayes' rule we have,

$$ P( \theta | D ) = \frac{ P ( D | \theta) P ( \theta )}{ P( D )} $$

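This is called the posterior distribution of $ \theta $. Since the evidence $ P(D) $ does not depend on $ \theta $, the posterior is proportional to $ P(D|\theta) P(\theta) $.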

- Maximum a posteriori estimate:

$$ \theta_{MAP} = \arg\max_{\theta} P(\theta | D) $$

- Compare it to MLE:

$$ \theta_{MLE} = \arg\max_{\theta} P( D | \theta) $$
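To make the difference concrete, here is a minimal grid-search sketch for a coin with $ P(\text{heads}) = \theta $. It assumes a hypothetical prior that leans towards a fair coin (density proportional to $ \theta^4 (1-\theta)^4 $) and hypothetical data of 8 heads and 2 tails. The MLE maximises $ \log P(D|\theta) $ alone, while the MAP maximises $ \log P(D|\theta) + \log P(\theta) $; $ P(D) $ is dropped because it does not change the arg max.

```python
import math


def grid_mle_and_map(heads, tails, prior, grid_size=1001):
    """Grid-search sketch for a coin with P(heads) = theta.

    Returns (theta_MLE, theta_MAP) among the interior grid points of (0, 1).
    `prior` may be unnormalised: scaling it does not move the arg max.
    """
    best_mle = best_map = None
    best_loglik = best_logpost = -math.inf
    for i in range(1, grid_size - 1):  # skip theta = 0 and theta = 1 to avoid log(0)
        theta = i / (grid_size - 1)
        # log P(D | theta) for a fixed sequence with `heads` heads and `tails` tails
        loglik = heads * math.log(theta) + tails * math.log(1 - theta)
        # log P(D | theta) + log P(theta); log P(D) is dropped since it is constant in theta
        logpost = loglik + math.log(prior(theta))
        if loglik > best_loglik:
            best_loglik, best_mle = loglik, theta
        if logpost > best_logpost:
            best_logpost, best_map = logpost, theta
    return best_mle, best_map


def fair_leaning_prior(theta):
    # Hypothetical prior belief that the coin is roughly fair (peaks at 0.5)
    return theta**4 * (1 - theta) ** 4


theta_mle, theta_map = grid_mle_and_map(heads=8, tails=2, prior=fair_leaning_prior)
print(theta_mle, theta_map)  # MLE is 0.8; the MAP is pulled towards 0.5 (about 0.667)
```

With only ten tosses, the prior noticeably pulls the estimate towards a fair coin; with more data, the likelihood dominates and the two estimates move closer together.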

Hypothesis and Data

- Discrete hypothesis and Discrete data

- Continuous hypothesis and Discrete data

Homework (Slides)

- Discrete hypothesis and Continuous data

- Continuous hypothesis and Continuous data

Discrete hypothesis and Discrete data

<img src="./files/dis_dis.png" width = 80%/>

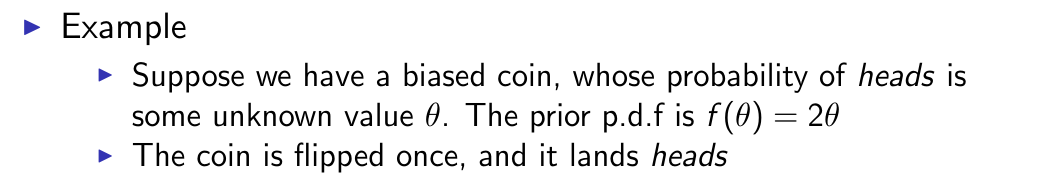

What is the MAP here?

What is the MLE here?
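For a finite set of hypotheses, both estimates can be read off a table: the MLE is the hypothesis with the largest likelihood, and the MAP is the one with the largest likelihood × prior. The sketch below uses hypothetical numbers (not those in the figure): three coin types with $ P(\text{heads}) $ of 0.5, 0.6 and 0.9, a prior that strongly favours the fair coin, and data consisting of a single toss that lands heads.

```python
def discrete_mle_and_map(prior, likelihood):
    """prior: dict hypothesis -> P(H); likelihood: dict hypothesis -> P(D | H).

    Returns (MLE hypothesis, MAP hypothesis, posterior as a dict).
    """
    unnormalised = {h: likelihood[h] * prior[h] for h in prior}
    evidence = sum(unnormalised.values())  # P(D) by the law of total probability
    posterior = {h: p / evidence for h, p in unnormalised.items()}
    mle = max(likelihood, key=likelihood.get)
    map_h = max(posterior, key=posterior.get)
    return mle, map_h, posterior


# Hypothetical coin types and prior (illustrative only)
p_heads = {"A": 0.5, "B": 0.6, "C": 0.9}
prior = {"A": 0.8, "B": 0.1, "C": 0.1}

# Data D: one toss that lands heads, so P(D | H) = P(heads | H)
mle, map_h, posterior = discrete_mle_and_map(prior, p_heads)
print(mle, map_h)  # C A
print({h: round(p, 3) for h, p in posterior.items()})
```

Here the MLE and the MAP disagree: coin C explains a single head best, but the strong prior on coin A outweighs that.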

Beta distribution

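Let $ X \sim Beta(\alpha, \beta) $ with $ \alpha, \beta > 0 $.

Then $ X $ has the probability density function (for a continuous random variable $ P[X=x] = 0 $, so we work with the density)

$$ f_X(x) = \frac{\Gamma(\alpha + \beta)}{\Gamma(\alpha) \Gamma(\beta)} x^{\alpha - 1} (1 - x)^{\beta - 1}, \quad 0 < x < 1 $$

where $$ \Gamma(z) = \int_{0}^{\infty} t^{z-1} e^{-t} dt $$

Remember $ \Gamma(n) = (n - 1)! $ for every positive integer $ n $.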
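The density formula can be checked numerically with Python's standard `math.gamma`. This sketch (the helper names `beta_pdf` and `integrate` are illustrative, not from any library) verifies $ \Gamma(n) = (n-1)! $ for small $ n $ and uses a simple midpoint rule to confirm that a Beta density integrates to approximately 1.

```python
import math


def beta_pdf(x, a, b):
    """Density of Beta(a, b) at x, for 0 < x < 1 and a, b > 0."""
    norm = math.gamma(a + b) / (math.gamma(a) * math.gamma(b))
    return norm * x ** (a - 1) * (1 - x) ** (b - 1)


# Gamma(n) = (n - 1)! for positive integers n
for n in range(1, 8):
    assert math.isclose(math.gamma(n), math.factorial(n - 1))


def integrate(f, n=100_000):
    """Midpoint-rule approximation of the integral of f over (0, 1)."""
    h = 1.0 / n
    return sum(f((i + 0.5) * h) for i in range(n)) * h


print(integrate(lambda x: beta_pdf(x, 2, 3)))  # very close to 1.0
```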

Problems:

- For which value of $ x $ is the density of $ X $ maximised (i.e. what is its mode)? What if $ \alpha = \beta $?

- What is the expected value of X?
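A worked sketch of both answers, writing $ f(x) $ for the Beta density above. For $ \alpha, \beta > 1 $, set the derivative of the log-density to zero:

$$ \frac{d}{dx} \log f(x) = \frac{\alpha - 1}{x} - \frac{\beta - 1}{1 - x} = 0 \quad \Longrightarrow \quad x^{*} = \frac{\alpha - 1}{\alpha + \beta - 2} $$

The second derivative, $ -\frac{\alpha - 1}{x^2} - \frac{\beta - 1}{(1 - x)^2} $, is negative, so $ x^{*} $ is the maximum. When $ \alpha = \beta > 1 $ this gives $ x^{*} = \frac{1}{2} $. (For $ \alpha = \beta = 1 $ the density is uniform, so every point is a mode; for $ \alpha = \beta < 1 $ the density grows without bound near both 0 and 1.)

For the mean, use the fact that the $ Beta(\alpha + 1, \beta) $ density integrates to 1, together with $ \Gamma(z + 1) = z \Gamma(z) $:

$$ E[X] = \frac{\Gamma(\alpha + \beta)}{\Gamma(\alpha) \Gamma(\beta)} \int_{0}^{1} x^{\alpha} (1 - x)^{\beta - 1} dx = \frac{\Gamma(\alpha + \beta)}{\Gamma(\alpha) \Gamma(\beta)} \cdot \frac{\Gamma(\alpha + 1) \Gamma(\beta)}{\Gamma(\alpha + \beta + 1)} = \frac{\alpha}{\alpha + \beta} $$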

Check the shape of the distribution:

$ Beta(1, 1) $

- What happens when $ \alpha $ increases while $ \beta $ is kept fixed?

$ Beta(2, 1) $

$ Beta(5, 1) $

$ Beta(10, 1) $

- When would you use this as a prior?

$ Beta(0.5, 0.5) $
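One way to check these shapes without plotting is to tabulate each density at a few points. In this sketch the evaluation points are an arbitrary choice. $ Beta(1, 1) $ is flat (uniform); $ Beta(\alpha, 1) $ has density $ \alpha x^{\alpha - 1} $, so increasing $ \alpha $ pushes the mass towards 1; $ Beta(0.5, 0.5) $ is U-shaped. One natural reading is that the U shape suits the earlier belief that a coin is extremely biased towards either heads or tails.

```python
import math


def beta_pdf(x, a, b):
    # Beta(a, b) density, repeated here so the block is self-contained
    return (math.gamma(a + b) / (math.gamma(a) * math.gamma(b))
            * x ** (a - 1) * (1 - x) ** (b - 1))


params = [(1, 1), (2, 1), (5, 1), (10, 1), (0.5, 0.5)]
xs = [0.05, 0.25, 0.5, 0.75, 0.95]  # arbitrary interior evaluation points

# Density values for each (alpha, beta) pair at the points in xs
table = {(a, b): [beta_pdf(x, a, b) for x in xs] for a, b in params}

print("x:", " ".join(f"{x:6.2f}" for x in xs))
for (a, b), row in table.items():
    print(f"Beta({a}, {b}):", " ".join(f"{v:6.3f}" for v in row))
```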

Example:

Prior: $ Beta(\alpha, \beta) $

Observed data: $ s $ heads, $ t $ tails

Find the posterior.
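A worked derivation, treating the data as a fixed sequence of tosses with $ P(\text{heads}) = \theta $ (a binomial coefficient for unordered counts would only add a constant factor that does not depend on $ \theta $):

$$ P(\theta | D) \propto P(D | \theta) P(\theta) \propto \theta^{s} (1 - \theta)^{t} \cdot \theta^{\alpha - 1} (1 - \theta)^{\beta - 1} = \theta^{\alpha + s - 1} (1 - \theta)^{\beta + t - 1} $$

This is, up to a constant, a Beta density, so the posterior is $ \theta | D \sim Beta(\alpha + s, \beta + t) $.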

Find the MAP estimate of $ \theta $
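Since the posterior for this model is $ Beta(\alpha + s, \beta + t) $, the MAP estimate is its mode, $ \theta_{MAP} = \frac{\alpha + s - 1}{\alpha + \beta + s + t - 2} $, whereas the MLE is $ \frac{s}{s + t} $. The sketch below codes both; the function names are illustrative. The mode formula is applied only when both posterior parameters are at least 1 and not both equal to 1, where it gives the maximum (possibly at an endpoint).

```python
def beta_posterior(alpha, beta, s, t):
    """Posterior Beta parameters after s heads and t tails, from a Beta(alpha, beta) prior."""
    return alpha + s, beta + t


def map_estimate(alpha, beta, s, t):
    """Mode of the Beta posterior: (a - 1) / (a + b - 2), valid when a, b >= 1 and a + b > 2."""
    a, b = beta_posterior(alpha, beta, s, t)
    if a < 1 or b < 1 or a + b <= 2:
        raise ValueError("closed-form mode requires a, b >= 1 and a + b > 2")
    return (a - 1) / (a + b - 2)


def mle_estimate(s, t):
    """MLE of theta for s heads and t tails: the observed fraction of heads."""
    return s / (s + t)


print(mle_estimate(8, 2))        # 0.8
print(map_estimate(5, 5, 8, 2))  # 12 / 18, about 0.667
print(map_estimate(1, 1, 8, 2))  # uniform Beta(1, 1) prior: MAP equals the MLE, 0.8
```

With the uniform prior $ Beta(1, 1) $ the MAP estimate reduces to $ \frac{s}{s + t} $, the MLE; larger $ \alpha $ and $ \beta $ act like extra "pseudo-tosses" that pull the estimate towards the prior.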

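Why the Beta distribution? Conjugate priors: with a Bernoulli (coin-toss) likelihood, a Beta prior yields a Beta posterior, so the posterior stays in the same family as the prior.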
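A practical consequence of conjugacy is that Bayesian updating reduces to adding counts: each head adds 1 to the first Beta parameter and each tail adds 1 to the second, so updating one toss at a time gives the same posterior as updating on all the data at once. The toss sequence and the $ Beta(2, 2) $ starting prior below are hypothetical.

```python
def update(params, toss):
    """One Bayesian update of a Beta(a, b) distribution after a single toss ('H' or 'T')."""
    a, b = params
    return (a + 1, b) if toss == "H" else (a, b + 1)


tosses = "HHTHHHTHHH"  # hypothetical sequence: 8 heads, 2 tails
params = (2, 2)        # hypothetical Beta(2, 2) prior
for toss in tosses:
    params = update(params, toss)  # the posterior after each toss is again a Beta

print(params)  # (10, 4), i.e. Beta(2 + 8, 2 + 2)
```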